Research

RAVEN: Realtime Accessibility in Virtual ENvironments for Blind and Low-Vision People

Conditionally accepted at CHI 26 (See Preprint) - ASSETS 25 Demo Paper 🏅 Best Demo Award - CHI 25 Late Breaking Work - Video

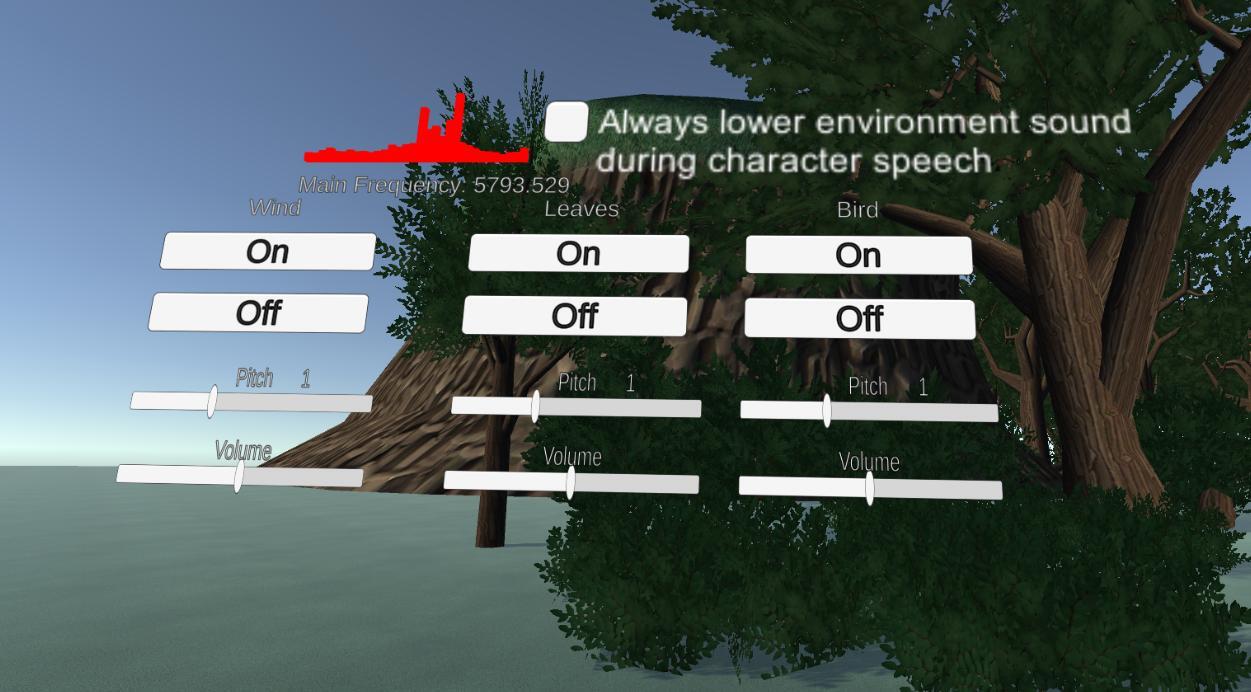

Xinyun Cao, Kexin Phyllis Ju, Chenglin Li, Venkatesh Potluri, Dhruv Jain

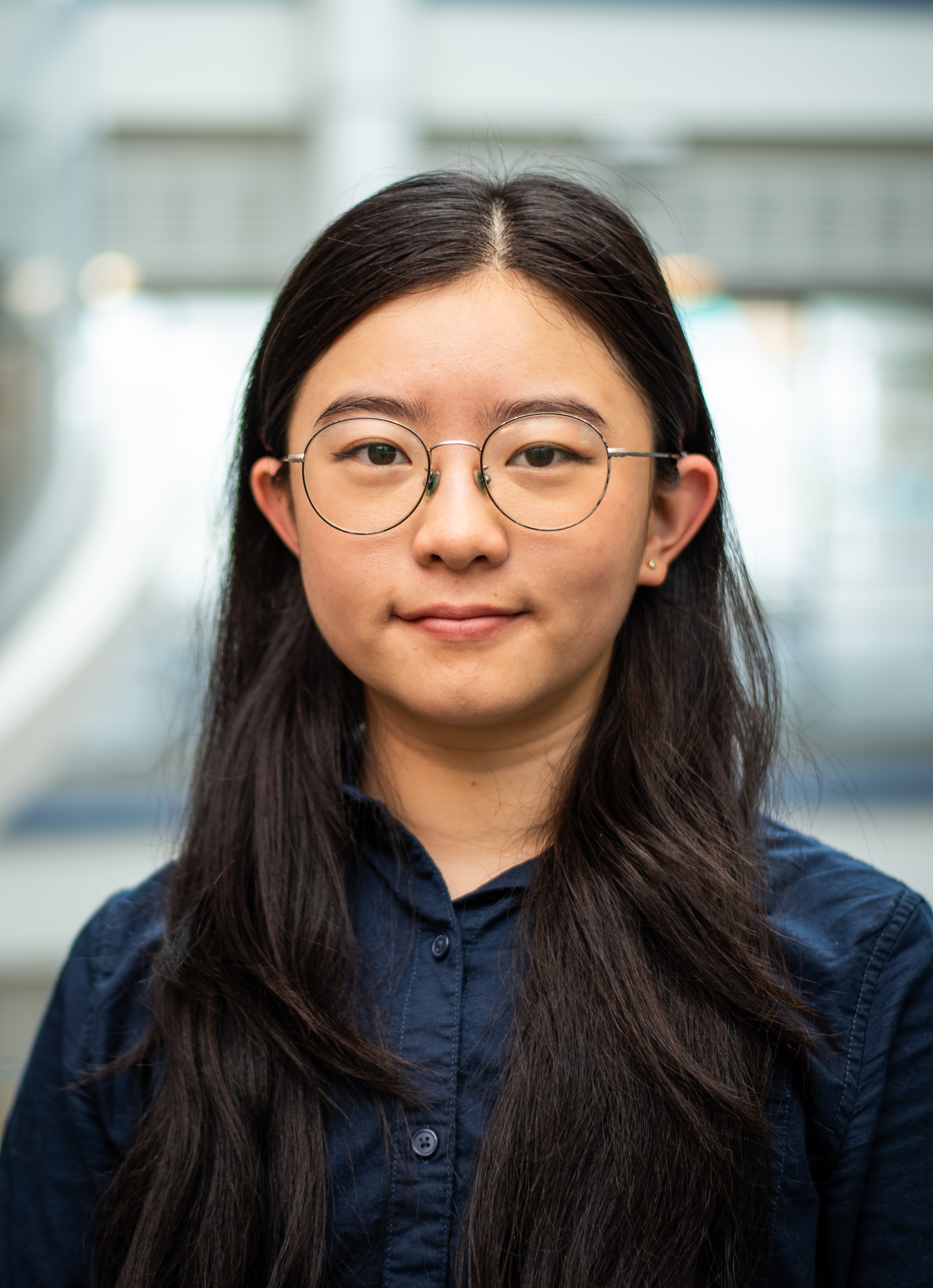

In this work, we present RAVEN, a system that responds to query or modification prompts from BLV users to improve the accessibility of a 3D virtual scene at runtime. We evaluated the system with eight BLV people, uncovering key insights into the strengths and shortcomings of generative-AI-driven accessibility in virtual 3D environments. The findings point to promising results as well as challenges related to system reliability and user trust.

SoundModVR: Sound Modifications in Virtual Reality to Support People who are Deaf and Hard of Hearing

ASSETS 24 Paper + Demo Paper - UIST 24 Demo Paper - Video

Xinyun Cao, Dhruv Jain

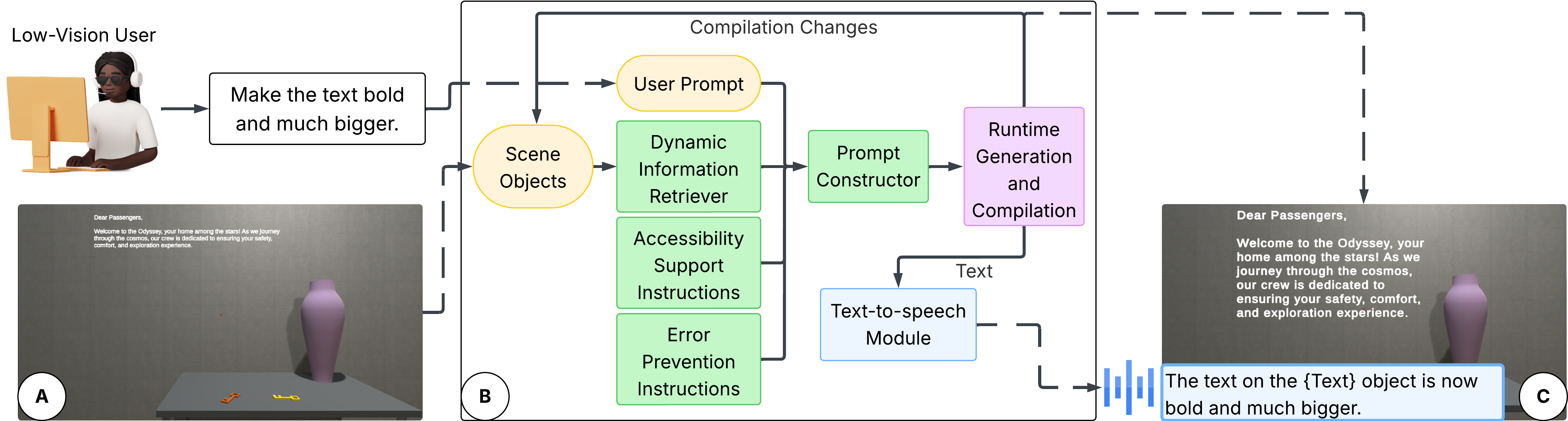

In this paper, we explore the possibilities of modifying sounds in VR to support DHH people. We designed and implemented 18 VR sound modification tools spanning four categories: prioritizing sounds, modifying sound parameters, providing spatial assistance, and adding additional sounds. We evaluated the tools with DHH people and Unity VR developers.

Dual Body Bimanual Coordination in Immersive Environments

James Smith, Xinyun Cao, Adolfo Ramirez-Aristizabal, Bjoern Hartmann

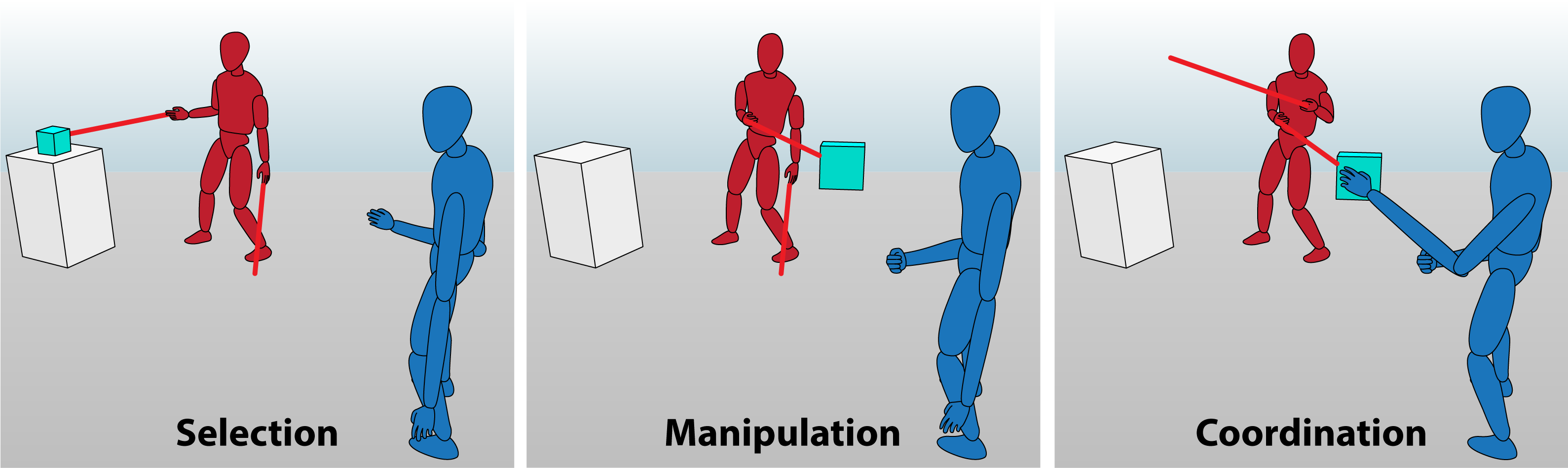

Dual Body Bimanual Coordination is an empirical study in which a single user simultaneously controls two bodies in virtual reality and interacts with the world through them. Users select and manipulate objects to perform a coordinated handoff between the two bodies. We investigate people's performance on this task, classify the strategies they chose to coordinate their hands, report on their sense of embodiment during the scenario, and share qualitative observations about user body schema.